What does the public think about AI?

Surveying public opinion

A number of surveys of the public's view of AI have been conducted over the last few years by organisations such as the Pew Research Center, Edelman and the AI Policy Institute. Most surveys have focussed on the USA, but Edelman's 2024 Trust Barometer provides some interesting data from 28 countries.

Developed economies more cautious than developing countries

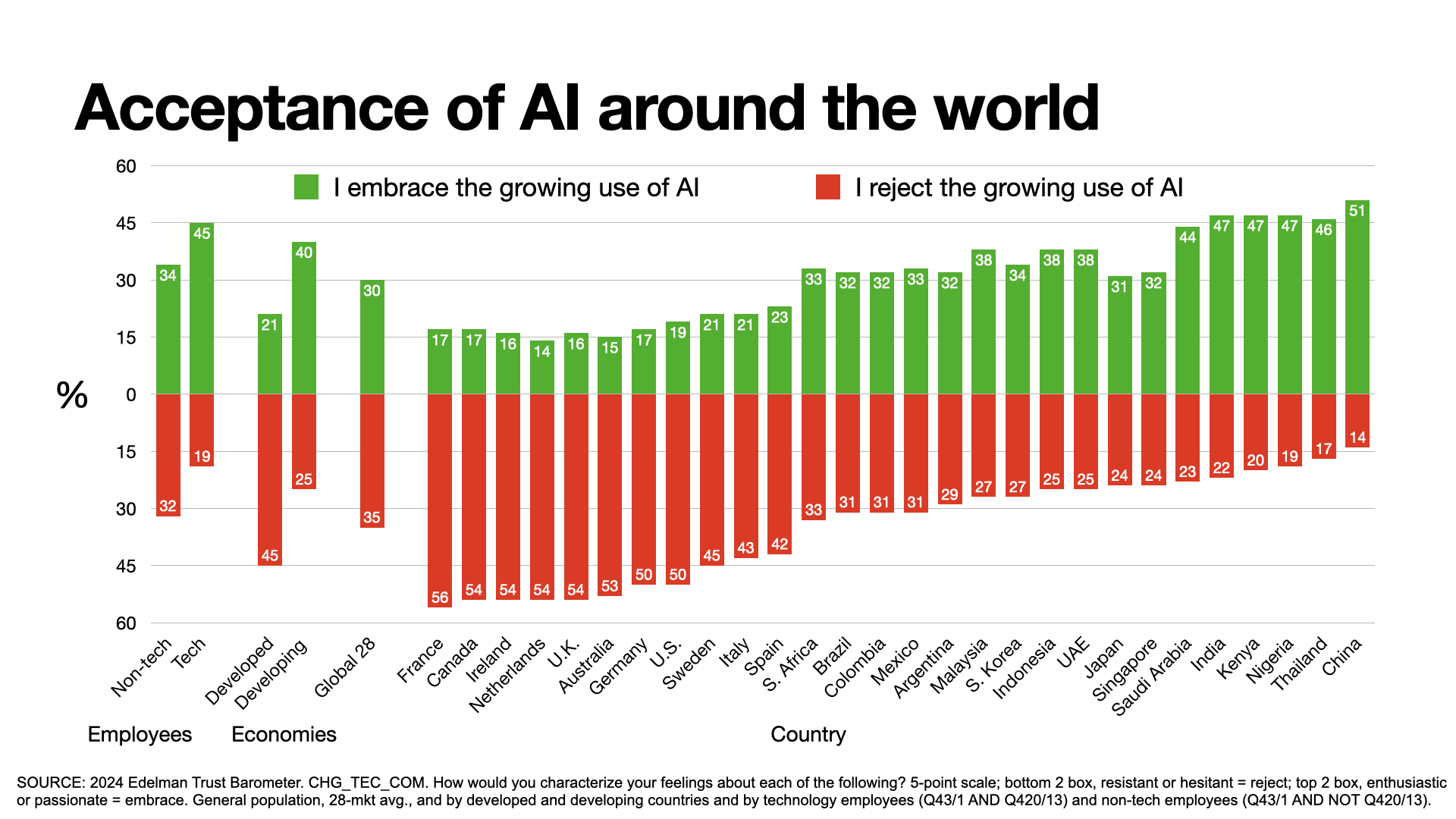

Edelman questioned people about their feelings towards AI: whether they were resistant or hesitant, enthusiastic or passionate. The graph shows the survey results, based on the data published in the 2024 Trust Barometer.

The graph also shows the difference between developing and developed economies as well as between non-tech and tech employees of companies.

Overall, developed economies are far more cautious about accepting the use of AI than developing countries. This may be a reflection of their exposure to the technology, although authoritarian countries like China have high exposure in some cities but still seem to show enthusiasm for its adoption.

Concern in America outweighs excitement

The Pew Research Center survey conducted in 2023 supports Edelman's finding for the USA, with a majority of respondents feeling more concerned than excited. The Pew data also shows a significant uptick in concern compared to previous years, probably because of the hype surrounding generative AI applications like ChatGPT.

Age makes a difference

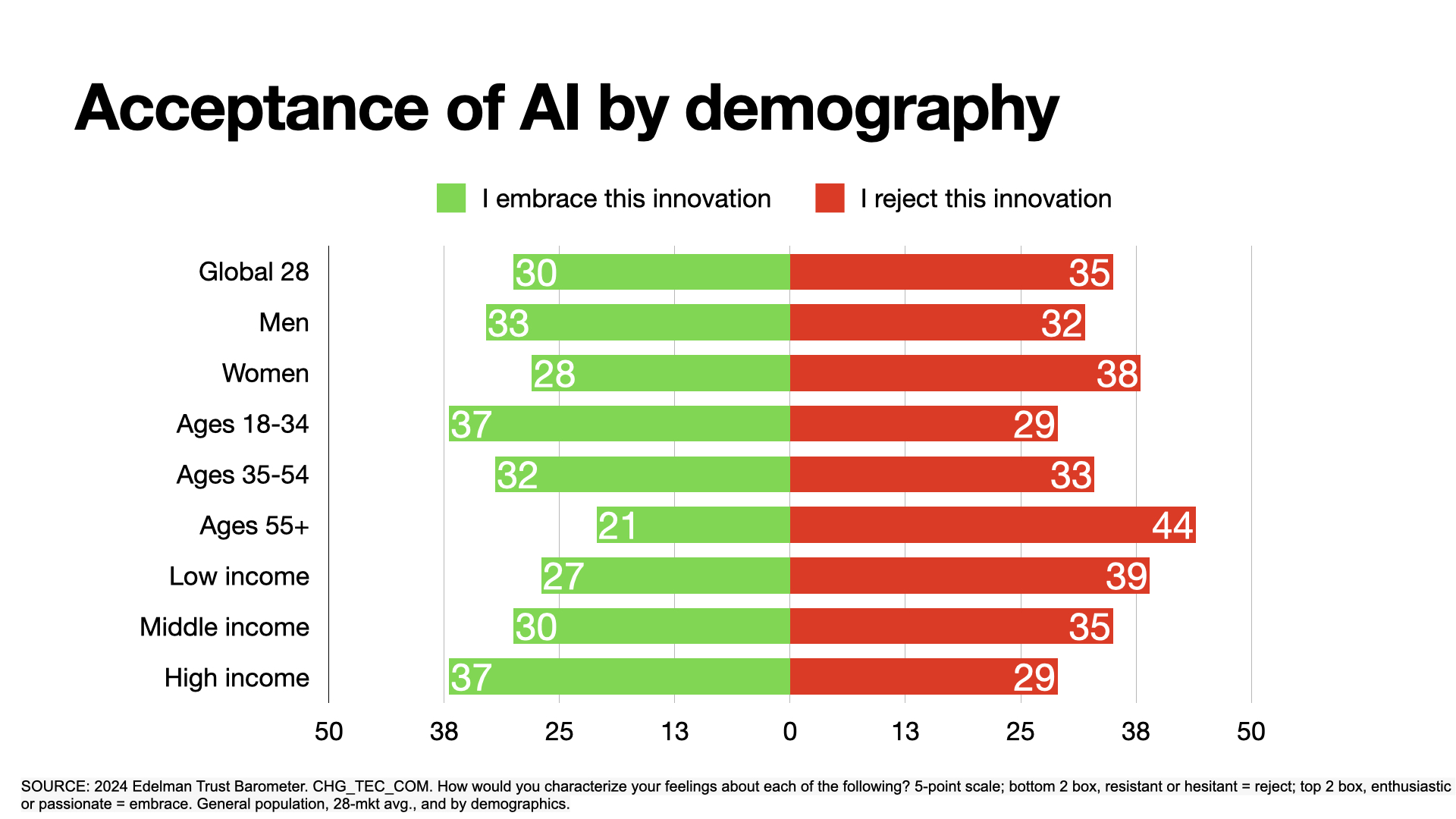

Edelman's survey also analysed the difference in acceptance or rejection by age, sex and income level. Age clearly makes a difference, as the graph illustrates, with older people being less enthusiastic than 18-34 year olds. Likewise, higher-income earners are more positive than low-income workers.

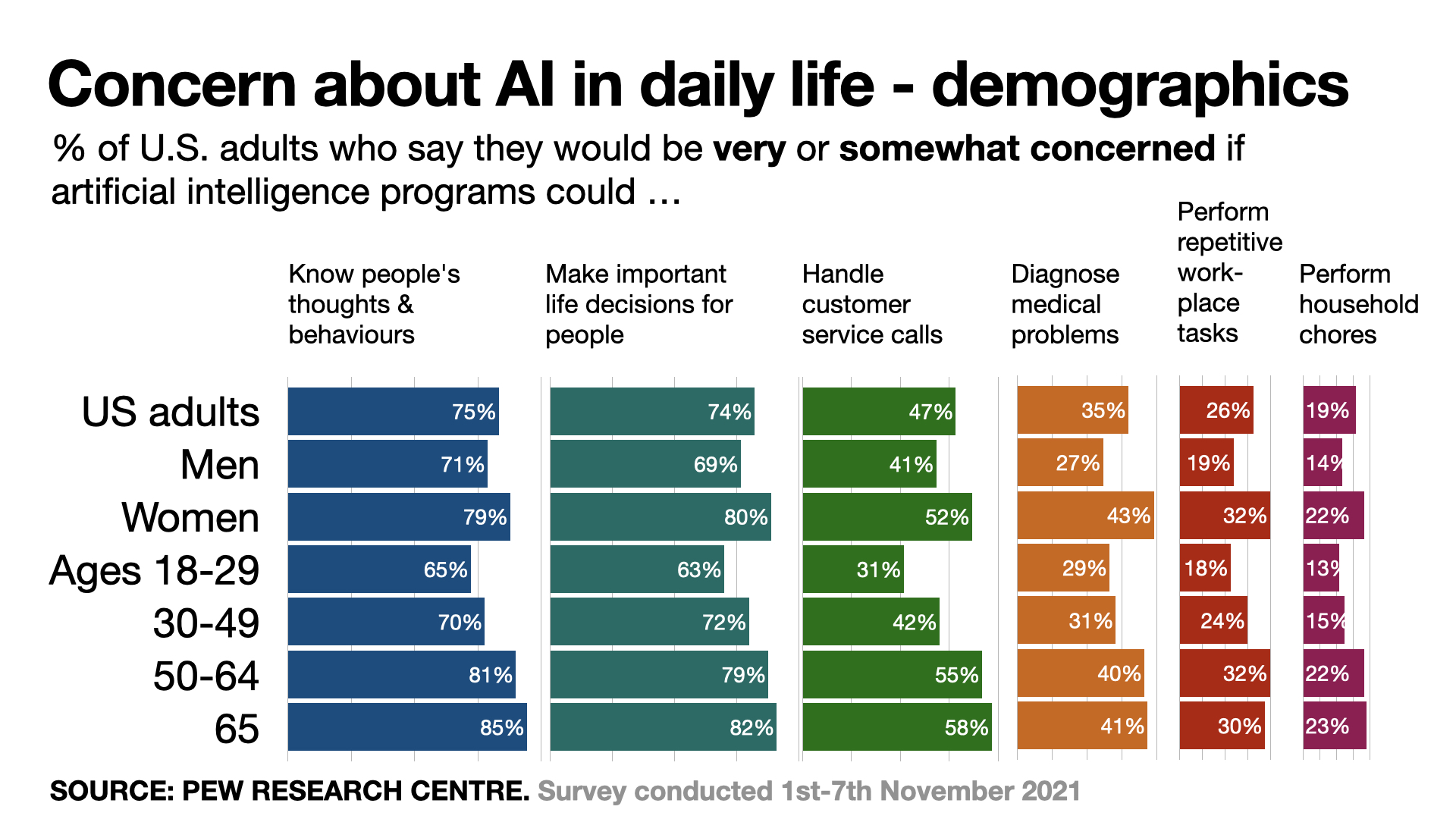

Intended use of AI influences concern

The Pew Research Center survey carried out in November 2021 sheds further light on how the intended application of AI influences people's concern. Applications that might know people's thoughts or behaviours, or make important decisions for them, elicit the greatest concern, whilst applications that would perform household chores are of the least concern. As with the Edelman Global 28 survey, age again makes a difference: in the Pew survey, older people are more concerned than younger people across all applications surveyed. Women are consistently more concerned than men across all applications by a significant margin.

Specific concerns about AI harmfulness

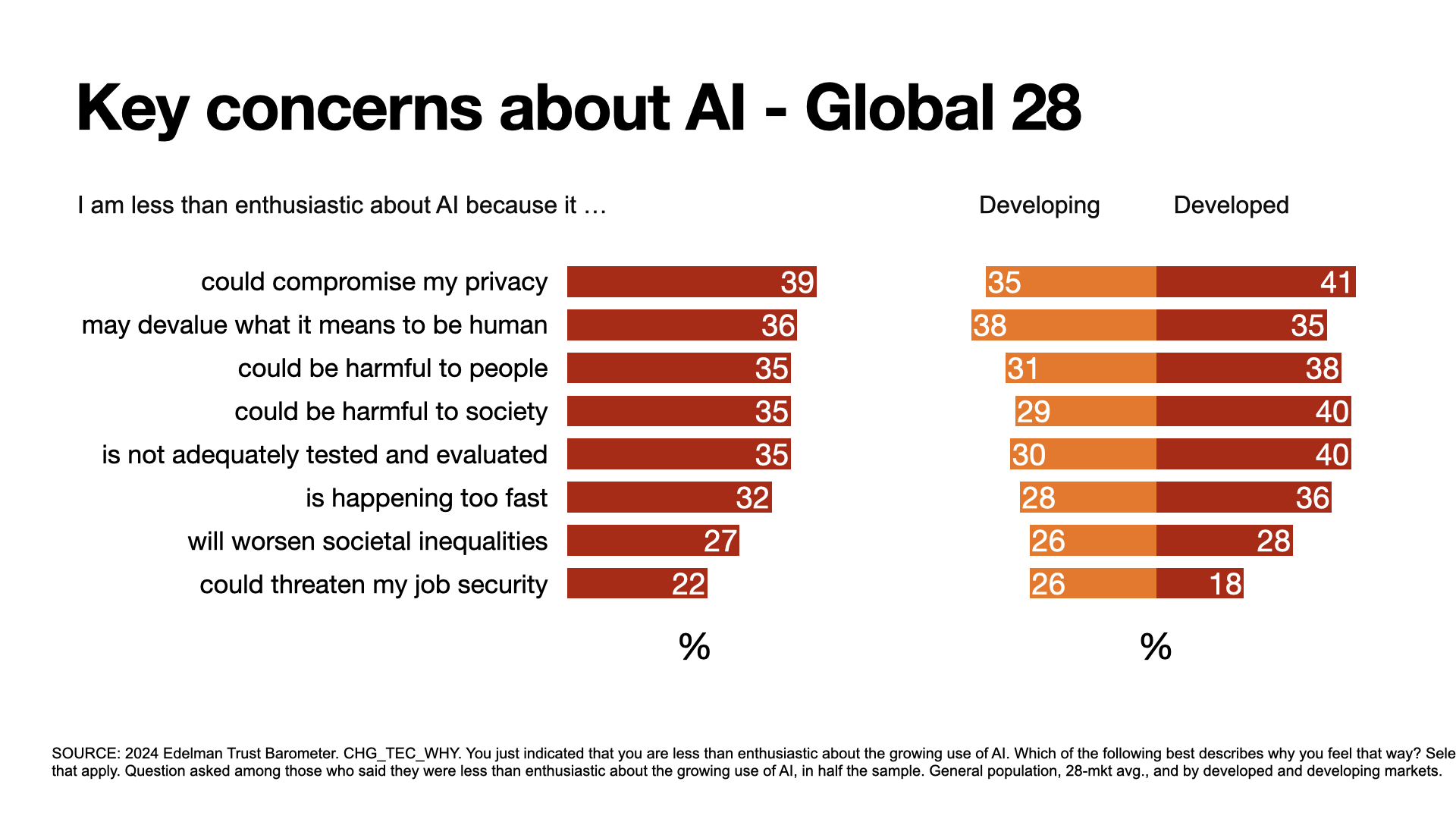

The previous chart showed the concerns those surveyed had about a number of specific applications of AI. When people were asked in the Edelman 2024 Trust Barometer survey why they were less enthusiastic about AI, they gave a number of reasons. Privacy came out on top, followed closely by a concern that AI may devalue what it means to be human, along with concerns that AI may be harmful to people and society. Interestingly, job security comes out as the least of people's concerns about AI.

The chart also shows how these concerns differ between developed and developing economies.

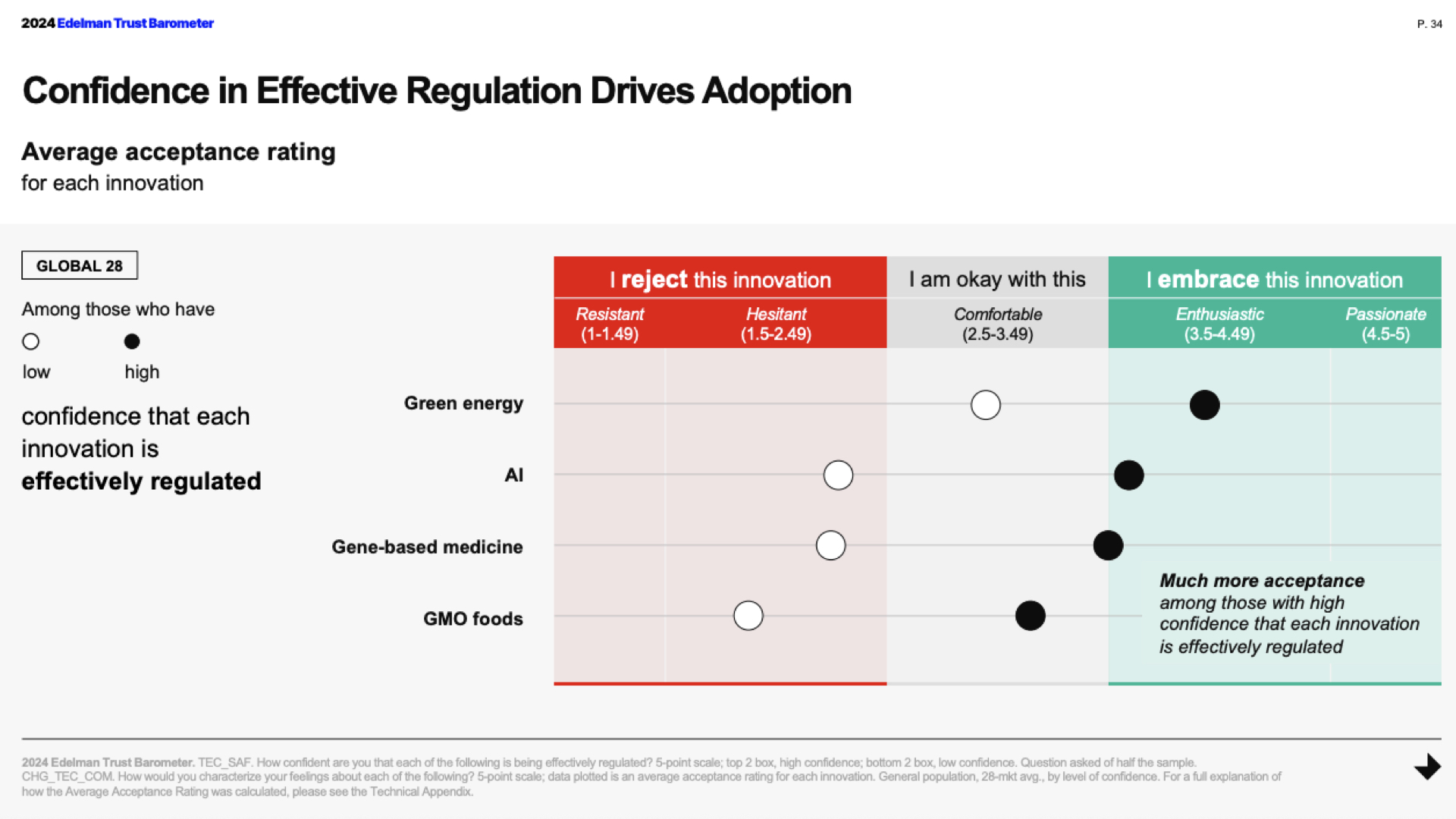

Regulation could help adoption of AI

Edelman's 2024 Trust Barometer survey also looked at various factors that influence people's trust in technology and in the institutions promoting or regulating it. The survey found that people in most developed countries strongly distrust their governments and doubt their competence to regulate technologies like AI effectively.

The chart below from Edelman's survey illustrates that green energy enjoys much higher acceptance and no rejection, whereas AI innovation meets resistance and only moderate acceptance. Acceptance of ‘green’ innovation may be due to changes in regulation and the public's perception of the importance of reducing reliance on fossil fuels over the last decade.

2024 will be a defining year for AI and how our political leaders choose to deal with it.

Daniel Colson

CEO, AI Policy Institute

Americans want government action on AI

The New York Times filed a lawsuit against OpenAI and Microsoft for copyright infringement in the use of its articles to train ChatGPT. The AI Policy Institute conducted a survey of 1,264 voters in the USA on 1st January 2024, finding that 59% agreed with the lawsuit whilst only 18% disagreed. The poll also found that 70% of voters agreed that AI companies should compensate news outlets like the New York Times if they want to use their articles to train their models.

65% of voters said Congress should pass legislation regarding artificial intelligence in 2024, agreeing with the statement that “the effects of AI are already being seen in society and it’s time to act”.

AI Policy Institute

Voter poll, 1 Jan 2024

References

2024 Edelman Trust Barometer: Global Study. https://www.edelman.com/trust/2024/trust-barometer

2024 Edelman Trust Barometer: Supplemental Report, Insights for the Tech Sector. https://www.edelman.com/trust/2024/trust-barometer/special-report-tech-sector

Pew Research Center: What the data says about Americans' views of AI. https://www.pewresearch.org/short-reads/2023/11/21/what-the-data-says-about-americans-views-of-artificial-intelligence/

AI Policy Institute: Voters Support NYT’s Lawsuit vs. OpenAI, Want Congressional Action on AI in 2024, Disclosures on AI Use in Political Ads, Slowdown on AI Development. https://theaipi.org/poll-biden-ai-executive-order-10-30-8/
