How do some applications of AI harm humanity?

Six areas of harm

Whilst AI applications can benefit us in many ways, certain types of application, such as surveillance, self-driving vehicles, automated decision-making and simulated humanness, also bring potential harms.

Enlightenment thinking has led many in the West to believe that progress is good and will result in human flourishing. Technological progress is automatically assumed to be good for humanity, and we often think that new is better than old. However, our obsession with technology is damaging what it means to be human, as we have seen with social media algorithms that are designed to drive user engagement and profit yet are dividing society and causing harm to children.

Loss of cognitive acuity

Reliance on decision support systems (e.g. in financial, judicial and medical settings) diminishes cognitive acuity. These systems also incorporate bias and lack transparency about how any decision is reached, and they deliver only a probability. It is naïve to think that such algorithms can be made either transparent or free of bias, given that they are usually stochastic processes rather than rule-based ones, and that data will always be biased because humans are biased. Decisions that affect individuals and groups must always have human oversight and a right of appeal, with the appeal process relying solely on human assessment and judgement.

Damage to relationships

Relationships are damaged through over-engagement with, and reliance on, digital assistants, and through the drive to create ever more realistic simulations of humanness. Whilst we do not propose an outright ban on such devices, we believe it should be a requirement that users always know they are interacting with an artefact, not a human. More empirical research into these harms is needed, including research into methods that ensure such artefacts do not appear human (e.g. using non-human voices). The evaluation of a user’s emotions, personality and character by AI-based artefacts that simulate a dialogue (e.g. interviewing systems) should be banned.

Loss of freedom & privacy

Freedom and privacy are lost through the use of private data and the surveillance of citizens, whether by the state or by private companies. The use of AI to monitor, track and identify citizens from their faces or other personal attributes is unprecedented in any civilisation and is quite different from the use of other biometrics such as fingerprints. We believe that the European Commission and other governments are well aware of these dangers, and urgent action is required to prevent mass surveillance from becoming normalised.

Although it did not involve AI, the deployment of Covid-19 tracking apps has brought this prospect even closer. We urge an outright ban on the state’s use of AI-based surveillance technologies. An even greater level of surveillance has already been established in the private sector through Big Tech’s use of users’ browsing data, shopping activity and data from a host of other sources such as Fitbit health monitors. Much of humanity has already lost its freedom and autonomy! GDPR legislation in Europe needs strengthening to prevent the extraction and use of personal data. We believe that the practice of companies providing free services or products in exchange for data should be banned unless users give explicit and informed consent.

Loss of moral agency

In assigning moral agency to artefacts such as autonomous weapons and self-driving vehicles, humans are losing their own moral agency. Such delegation should be banned, and human decision-making should be required wherever life is at risk. In other words, humans should be responsible for moral decisions.

Loss of reality & addiction

We are in danger of losing a sense of what is real and embodied through the overuse of Augmented and Virtual Reality systems. Research is needed in this area to provide more empirical evidence and to inform potential health warnings for such devices. There should also be strong human oversight and a cautious approach to application development, to protect humanity from the harms that can occur when users lose touch with the real world and real relationships.

Loss of the dignity of work

AI systems, including robotics, are already changing the workplace and displacing jobs. We believe that there is human dignity in work and that providing alternative work must be a condition of replacing jobs with AI systems and robotics, except where such systems preserve life by carrying out hazardous tasks.

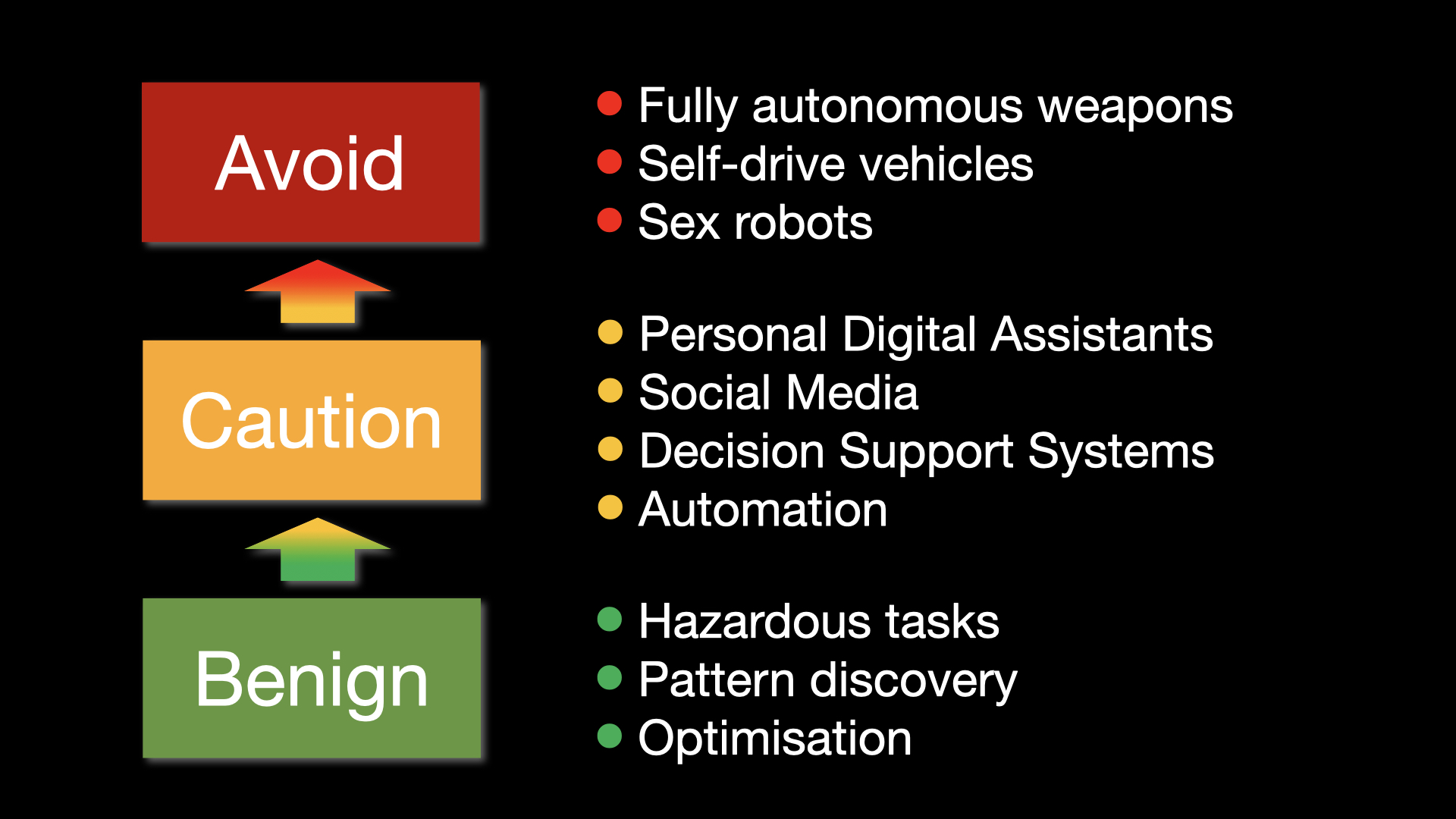

A spectrum of risk

AI applications pose a spectrum of risk to humanity: many are benign, some require care in their use, and others ought to be off limits. High-risk applications are a bone of contention between the Big Tech companies developing them and politicians who want to see their countries prosper but who also have a responsibility to protect citizens. The general public is often unaware of the threats, but there is a strong lobby from groups concerned with protecting human rights.