Search or browse our knowledge base for information on AI, human values at risk, case studies, policy & standards around the globe and AIFCS advocacy.

Copyright Infringement

What's all the fuss?

It’s now widely acknowledged, even by AI companies, that copyright data has been extensively used to train Large Language Models (LLMs) used in Generative AI systems like ChatGPT. Whats at issue is whether this is “fair use” of creators content, as the AI companies claim, or whether permission or licensing should have been sought first.

AI is not being developed in a safe and responsible, or reliable and ethical way. And that is because LLMs are infringing copyrighted content on an absolutely massive scale.

Dan Conway, CEO, The Publishers Association.

To license or not to license

AI companies are developing AI for commercial reasons so there is a clear difference between that and normal people learning from books and articles for their own non commercial interest. With Open AI backed by Microsoft that has a valuation around that of the UK’s GDP, is it really a credible argument that licensing will stifle innovation!

The principle of when you need a licence and when you don't is clear: to make a reproduction of a copyright-protected work without permission would require a licence or would otherwise be an infringement. And that is what AI does, at different steps of the process – the ingestion, the running of the programme, and potentially even the output. Some AI developers are arguing a different interpretation of the law. I don't represent either of those sides, I'm simply a copyright expert.

Dr Hayleigh Bosher, Reader in Intellectual Property Law, Brunel University London

New York Times Lawsuit

The New York Times (NYT) filed a lawsuit in December 2023 against Open AI and Microsoft, it’s financial backer, for infringement of NYT copyright articles scrapped from its website to train OpenAI large language model (LLM) in ChatGPT.

Whether the suit will go to trial remains to be seen but it is an important case that could determin the legitimacy of LLMs trained on vast amounts of publicly available (but not licensed) material – whether text, images, video or computer code.

If they (New York Times) can't protect their content as the largest, most influential voice on the internet who has content worth protecting, then everybody else loses their ability to protect themselves from what is essentially the bully in the room, which is Microsoft and OpenAI,

Davi Ottenheimer, Vice President of Trust and Digital Ethics at Inrupt, March 2024

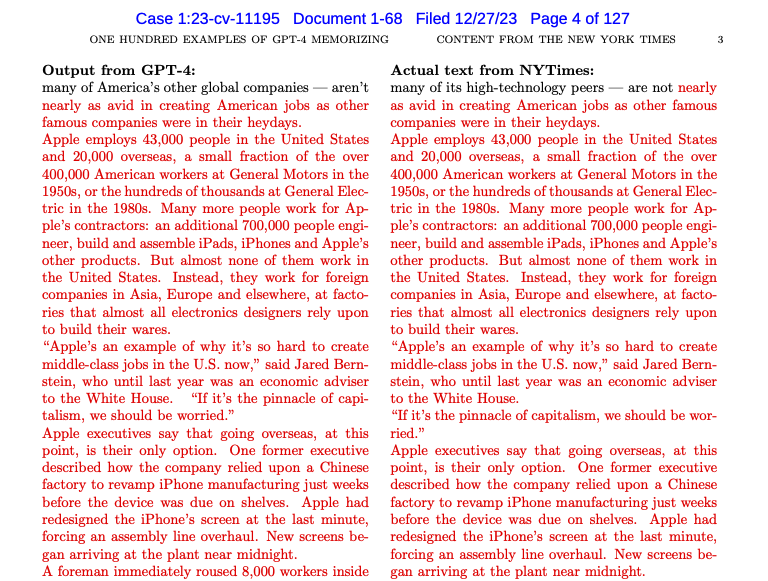

Protecting Society

Smaller organisations and the individual don’t have the power or financial muscle to take on BigTech and the outcome of any trial will be significant in determining whether our data on the internet is protected. BigTech claims that training LLMs on publicly available and even copyright data is “fair use” because they do not exactly reproduce the original material. This is not strictly true as an exhibit in NYT suit shows, listing 100s of example of output generated by ChatGPT4 that are identical to the NYT articles.

Whether ChatGPT4 or any LLM generates output exactly the same as the original or not seems beside the point as far as protecting copyright material. LLMs could not be developed without being trained on vast amounts of quality data. The product is therefore based , in many cases, on a lot of copyright material. Tech experts have admitted that these models would not exist without it. If companies want to use copyright data they should licence it. It also raises questions about whether it is fair to profit from ordinary citizens data that exists on the web, copyrighted or not.

Copyright law is not really just about protecting the content creator; it's actually about protecting society by encouraging creation, If creators don't have content controls, which is what OpenAI and Microsoft are arguing ... then they're undermining society.

Davi Ottenheimer, Vice President of Trust and Digital Ethics at Inrupt, March 2024

Inhibiting Innovation

Microsofts counter claim is that Media companies are trying to stiflle innovation. Indeed Sam Altman visited the UK to seek exemption from Copyright Law for LLMs, in order to advance AI.

They're saying The New York Times is trying to inhibit innovation here and that these two industries -- the AI tech world and the media -- can actually coexist and be symbiotic,

Davi Ottenheimer, Vice President of Trust and Digital Ethics at Inrupt, March 2024

UK House of Lords Report

The UK House of Lords Communications and Digital Committee took evidence from 41 expert witnesses, reviewed over 900 pages of written evidence, held roundtables with small and medium sized businesses hosted by the software firm Intuit, and visited Google and UCL Business during it’s inquiry into LLMs and Generative AI. Their report was published in February 2024, making a number of recommendations to the UK Government about Generative AI development and direction, including the need to balance risks and innovation.

LLMs may offer immense value to society. But that does not warrant the violation of copyright law or its underpinning principles. We do not believe it is fair for tech firms to use rightsholder data for commercial purposes without permission or compensation, and to gain vast financial rewards in the process. There is compelling evidence that the UK benefits economically, politically and societally from upholding a globally respected copyright regime.

House of Lords, Report on LLMs and Generative AI, February 2024

AI companies pushed back on the issue of copyright infringement, as one might have expected, arguing that it constituted “fair use” or that “it was needed to benefit citizens”. Notwithstanding these arguements, other legal opinion contributed to their recommendation on copyright that:

"The Government should prioritise fairness and responsible innovation. It must resolve disputes definitively (including through updated legislation if needed); empower rightsholders to check if their data has been used without permission; and invest in large, high‑quality training datasets to encourage tech firms to use licenced material."

House of Lords, Report on LLMs and Generative AI, February 2024

Companies should be responsible for harmful content

Copyright infringement is not the only issue with LLMs. There are many examples of biased or harmful text and image output being generated by Generative AI models. The likelihood is that it will not be possible to eliminate all factual errors (hallucinations) or harmful images, regardless of ‘red teaming’ to test systems and the use of ‘guard rails’ to avoid inappropriate output.

Microsoft can't escape the harmful consequences of the materials generated by its AI tools and will inevitably have to devote vast resources to moderating and stress testing their model and content.

Tinglong Dai, Professor, Johns Hopkins University Carey Business School, March 2024.

References

Esther Ajao, Microsoft whistleblower, OpenAI, the NYT, and ethical AI, TechTarget, 7 March 2024 https://www.techtarget.com/searchenterpriseai/news/366572699/Microsoft-whistleblower-OpenAI-the-NYT-and-ethical-AI?utm_campaign=20240313_Elon+Musk+plans+to+take+xAI+chatbot+Grok+open+source&utm_medium=email&utm_source=MDN&asrc=EM_MDN_289964931&bt_ee=NCxKdplO10yX0h1r0ulBlCpftTRhrJ%2BlIv9EtvXepvrmVkyFR5hSniN8NzgxEyFJG2%2BzyQ57yc6vBeeuaL084g%3D%3D&bt_ts=1710338801165

House of Lords Communications and Digital Committee, 1st Report of Session 2023–24, HL Paper 54, Large language models and generative AI. https://publications.parliament.uk/pa/ld5804/ldselect/ldcomm/54/54.pdf

Developing Trust

Human Values Risk Analysis

Authentic Relationships

LOW RISK

Privacy & Freedom

HIGH RISK

Uses copyright data

Moral Autonomy

LOW RISK

Cognition & Creativity

MEDIUM RISK

Can reduce critical thinking

Governance Pillars

Transparency

Companies opaque about what data they trained LLMs on although most acknowledge copyright data used.

Justice

Copyright clearly has been infringed, law suites currently the only redress.

Accountability

Companies should be held to account for infringement, does law need amending or clarifying?

Policy Recommendations

Organisations deploying a chatbot for use by the public or clients must be accountable for the output of the chatbot, even if it is in error or conflicts with other publicly viewable data. Legislation may be needed to assign ‘product’ liability where the chatbot is the ‘product’.

Copyright protection should be enforced and no exception made for AI companies. Chapter 8 of the House of Lords Report cited, deals with Copyright in some detail and highlights various policy options and limitations of different approaches, such licensing and opt in or opt out of data crawling on websites.

Developers and companies should be required to make information available on what data their system has been trained on.